Tech & Democracy Roundup [10/13/25]

News, updates, and research from the intersection of Tech & Democracy

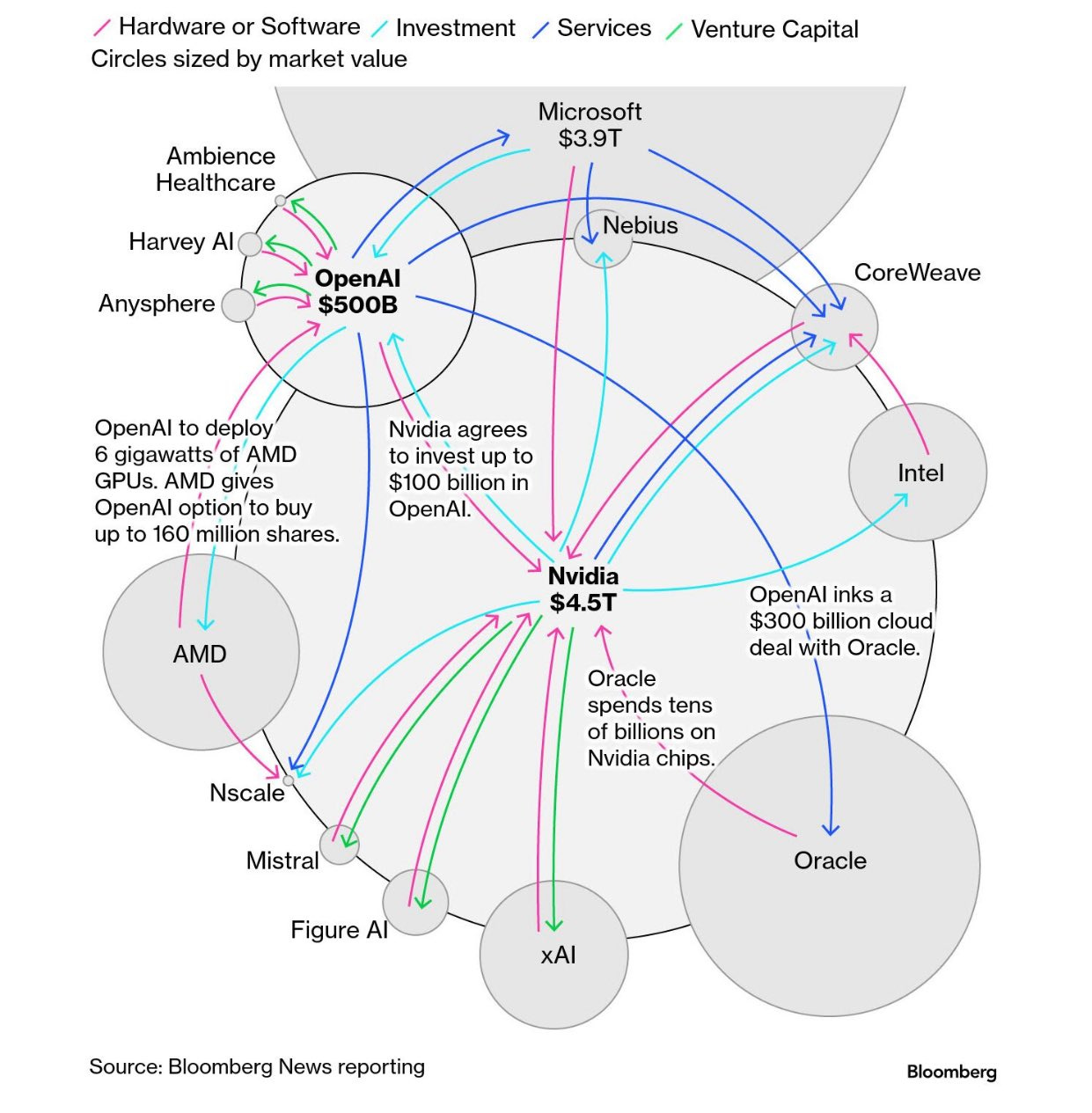

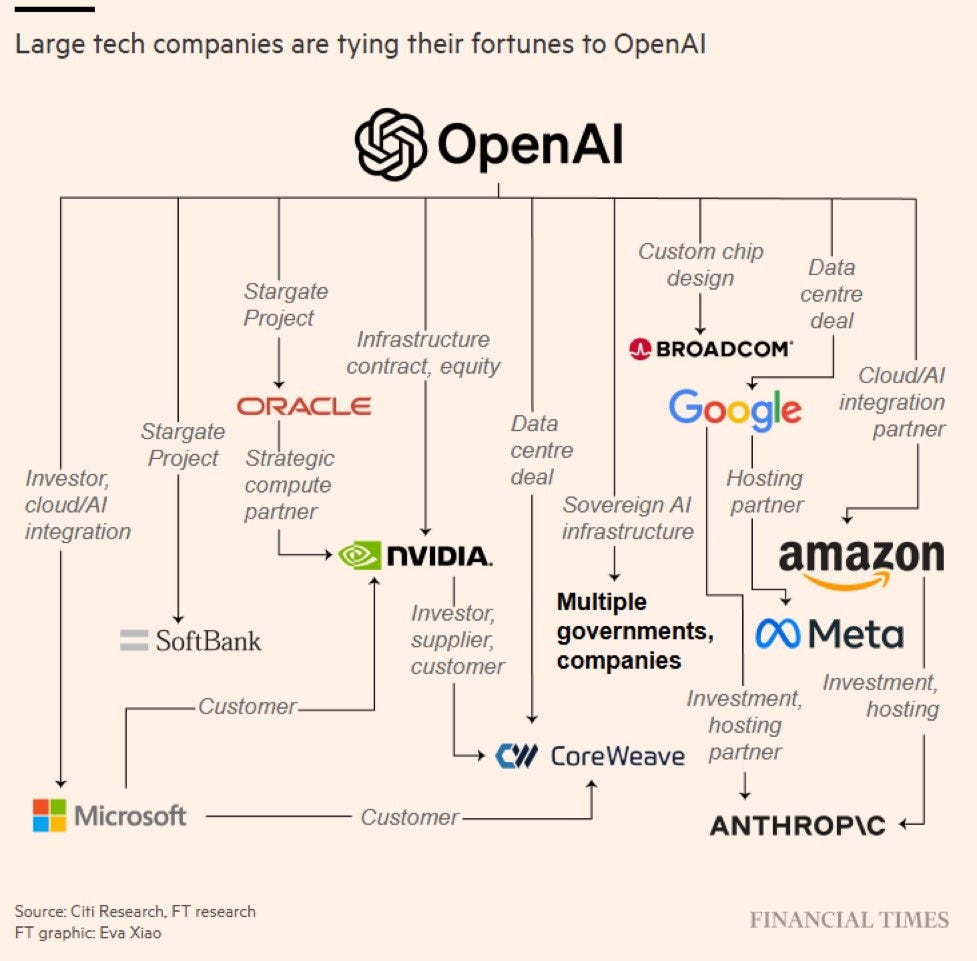

Seems like the whole American economy is now one big bet resting entirely on faith in AI. Specifically, the OpenAI octopus is now thoroughly integrated and load-bearing across the tech sector:

OpenAI and AMD strike massive computing deal

OpenAI could take up to a 10% stake in AMD

AMD stock up 43% this week

In other AI news, Elon Musk gambles on sexy AI companions

NYSE Owner to Invest Up to $2 Billion in Polymarket, takes a 20% stake

A Senate report released Monday says AI and automation could replace nearly 100 million jobs across various industries over the next decade.

For your attention…

“A Manifesto of the Attention Liberation Movement”

And in governance…

Governance strategies for biological AI: beyond the dual-use dilemma

Bruce Schneier's TIME piece on how AI will unevenly impact the 2026 elections

California signed landmark AI safety bill into law (SB53)

Requires developers of “large frontier” models to disclose on their websites a framework for how they approach safety issues

Requires companies to publicly release transparency reports when they deploy new models and updates

Makes it easier for members of the public and company whistleblowers to report safety risks by establishing a mechanism for people to report to the state’s Office of Emergency Services

Calls for the creation of a consortium tasked with building a cloud computing cluster, “CalCompute,” to support the development of AI that is safe, ethical, equitable, and sustainable

This grew out of the previously failed SB1047. Anthropic supported the bill; other developers did not openly support it.

US rejects international AI oversight at UN General Assembly

Michael Kratsios, the director of the Office of Science and Technology Policy, said, “We totally reject all efforts by international bodies to assert centralized control and global governance of AI.”

President Donald Trump said in his speech to the General Assembly on Tuesday that the White House will be “pioneering an AI verification system that everyone can trust” to enforce the Biological Weapons Convention.

White House OSTP launched a new public Request for Information on Regulatory Reform on Artificial Intelligence, with responses due October 27

Lastly, on AI, slop, and the race to the bottom:

OpenAI releases a new version of the Sora video generation app and is preparing to launch a social app for AI-generated videos

As “AI slop” apps proliferate, David Foster Wallace remains dead

How can OpenAI and Sam Altman maintain their sense of high moral ambition (build AGI, solve intelligence, save the world, unleash abundance and utopia) when they’re busy diving nose-first into the attention slop-trough and releasing an AI-generated TikTok competitor?

AI-generated “actress” Tilly Norwood sparks backlash

Researchers detail 6 ways chatbots seek to prolong ‘emotionally sensitive events’

In a previous study, De Freitas found that about 50 percent of Replika users have romantic relationships with their AI companions.

In the latest research, bots employed at least one manipulation tactic in more than 37 percent of conversations where users announced their intent to leave.

Working paper on generative propaganda: https://arxiv.org/pdf/2509.19147

Broadens the conversation beyond deepfakes and prompts an interesting discussion of possible interventions

OpenAI lets users buy stuff directly through ChatGPT

Released a feature called Instant Checkout

As OpenAI tilts hard into race-to-the-bottom competition and monetization, can Anthropic maintain some kind of ideological integrity and stay relevant in the grand game? Or will every major player maximize competitiveness at the expense of safety, values, social externalities, and existential risk?

Many are worried that this dynamic is inescapable. But then, what does that mean for the AI race?

One of the most famous blog posts in Silicon Valley history is relevant here for thinking about ‘race-to-the-bottom’ multipolar trap problems: Slate Star Codex’s ‘Meditations on Moloch’

~